Equal employment opportunities may not be part of a computer’s calculations, but one engineer is trying to change that

When you apply for a job, chances are your resume has passed through numerous automated screening steps powered by hiring algorithms before it lands in a recruiter’s hands. These algorithms look at factors such as work history, job-title progression and education to weed out resumes. Employers are eager to use artificial intelligence (AI) and big data to speed up hiring, but the approach has drawbacks: depending on the data used, automated hiring decisions can be highly biased.

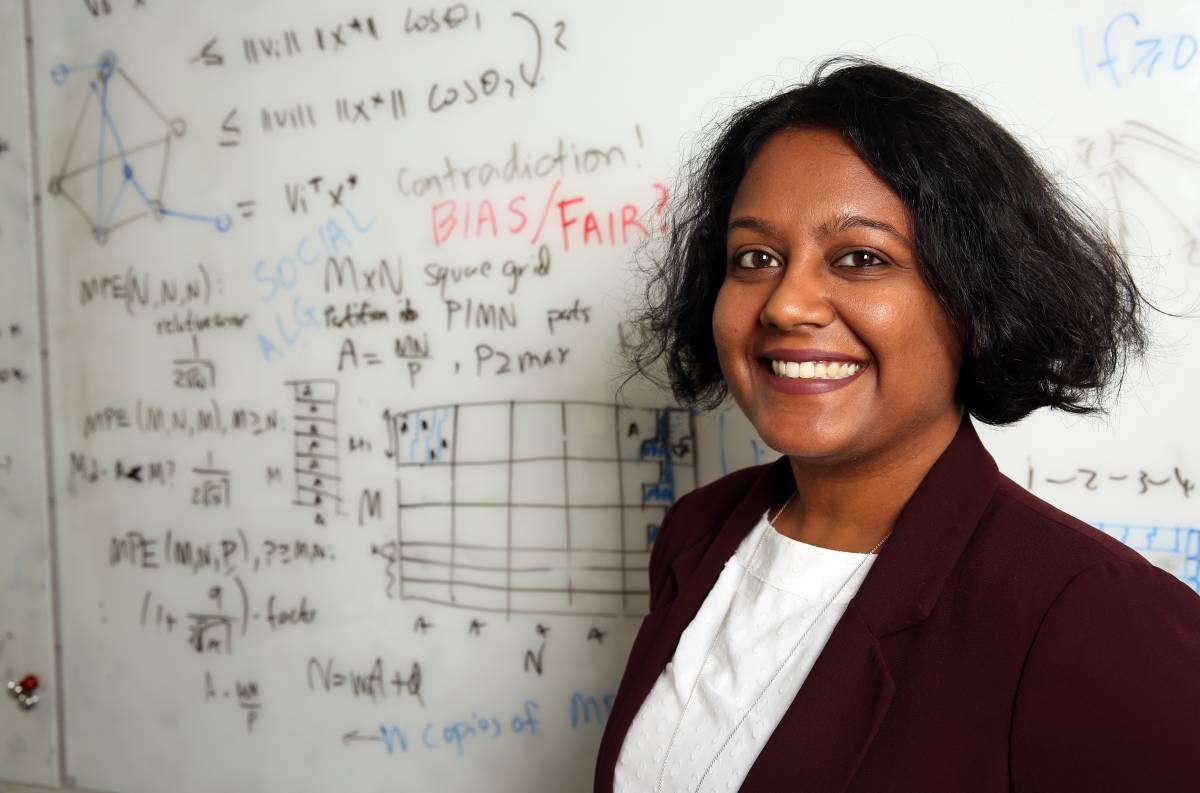

“Algorithms learn based on data sets, but the data is generated by humans who often exhibit implicit bias,” explains Swati Gupta, an industrial engineering researcher at Georgia Tech whose work focuses on algorithmic fairness. “Our hope is that we can use machine learning with rigorous mathematical analysis to fix the bias in areas like hiring, lending and school admissions.”

Job-related algorithms affect more than hiring; they also influence which jobs a candidate sees online. A LinkedIn survey found that men typically apply to jobs even if they aren’t qualified, whereas women are more conservative about sending out their resumes. Because recommendation algorithms learn from this behavior, a woman who sees a “jobs recommended for you” message on LinkedIn might be shown fewer job posts than a man, and those jobs might also pay less. Men have traditionally applied to higher-paying jobs, so the algorithms learn to target them with higher-paying job ads.

Another problem area is fairness in lending, where algorithms have learned to charge higher interest rates to people who do not comparison shop. Lending discrimination has historically come from face-to-face human bias. According to a Berkeley study, however, pricing disparities increasingly come from machine-learning algorithms that target applicants who are less likely to shop around with higher-priced loans. As a result of this algorithmic bias, Black and Latino borrowers pay roughly seven basis points (0.07 percentage points) more in interest on loans than White and Asian borrowers.
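A basis point is one hundredth of a percentage point, so a gap of seven basis points can sound negligible. The sketch below puts it in perspective using the standard fixed-rate amortization formula. The loan terms are hypothetical and chosen only for illustration: a $300,000, 30-year loan at 4.00% compared with the same loan at 4.07%. They are not figures from the Berkeley study.

```python
def bps_to_decimal(bps: float) -> float:
    """Convert basis points to a decimal rate (1 bp = 0.01% = 0.0001)."""
    return bps / 10_000


def monthly_payment(principal: float, annual_rate: float, years: int) -> float:
    """Monthly payment on a standard fixed-rate, fully amortizing loan."""
    r = annual_rate / 12          # monthly interest rate
    n = years * 12                # number of monthly payments
    if r == 0:
        return principal / n
    return principal * r / (1 - (1 + r) ** -n)


# Hypothetical loan terms, for illustration only
principal = 300_000
base_rate = 0.04
gap_bps = 7
years = 30

base = monthly_payment(principal, base_rate, years)
higher = monthly_payment(principal, base_rate + bps_to_decimal(gap_bps), years)
extra_monthly = higher - base
extra_total = extra_monthly * years * 12

print(f"Payment at 4.00%: ${base:,.2f}")
print(f"Payment at 4.07%: ${higher:,.2f}")
print(f"Extra per month: ${extra_monthly:,.2f}")
print(f"Extra over the life of the loan: ${extra_total:,.2f}")
```

Under these assumptions, the gap amounts to roughly a dozen dollars a month. Over thousands of dollars of payments across the life of a loan, and across many borrowers, a small per-loan disparity adds up.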

So, how can computer scientists and engineers teach an algorithm to be fairer?

Gupta’s first step is to define what makes an algorithm biased: what input data is creating the bias? Once she identifies the problematic data, she adjusts the algorithm, programming it to recognize that some data sets might be biased. Knowing this, the algorithm can make fairer decisions.
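The article does not describe Gupta’s specific techniques. As a generic illustration of what “defining what makes an algorithm biased” can look like in practice, one common first check compares selection rates across groups. The sketch below computes each group’s selection rate and the ratio of the lowest rate to the highest, often called the disparate impact ratio. The 0.8 threshold echoes the “four-fifths rule” of thumb in U.S. employment selection guidelines. The group labels and counts are invented for illustration.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Map each group to the fraction of its candidates who were selected.

    decisions: iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 = equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so the rates are trivially equal
    return min(rates.values()) / highest


# Hypothetical screening outcomes: 100 candidates per group
decisions = (
    [("men", True)] * 45 + [("men", False)] * 55
    + [("women", True)] * 30 + [("women", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"Disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("Below the four-fifths rule of thumb: flag for review")
```

A low ratio only flags a disparity; it does not explain which input data causes it. Tracing the disparity back to specific features and correcting for it is the harder step the article attributes to Gupta’s research.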

“Algorithms must be adjusted to be more socially conscious,” said Gupta. “We have to consider the impact these algorithms can have on our lives and ensure that discriminatory practices don’t get created.”

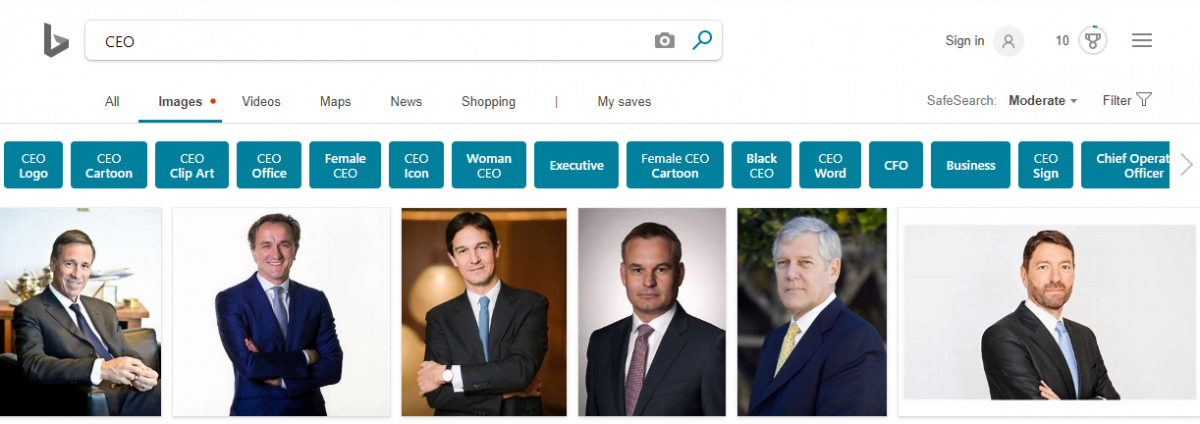

These biases also show up in search engines. Because algorithms learn from historical data, and most of the world’s CEOs are in fact men, a Google image search for “CEO” once returned only male executives. Google decided something needed to change and made the socially conscious decision to adjust the algorithm to show women too. By contrast, at the time of writing, an image search for “CEO” on Bing showed only men.

For Gupta, fairness has been a lifelong battle. She recalls wanting to become an engineer as a young woman in India and fielding questions like, “How will you care for a family if you’re an engineer? Why don’t you become an artist instead? Do you really want such a technical job?”

“I was constantly being told what roles I could and couldn’t have because of my gender, and I don’t want other young girls growing up with those same expectations,” said Gupta. “I love math, and I’m passionate about fairness, and the fact that I can help correct some of these algorithmic biases is really exciting.”

Gupta has noticed that the discussion of fairness in algorithms is largely missing from the computer science and engineering curriculum. In logistics classes, students are asked to focus on minimizing costs for companies, but not to consider what is ethical.

“Socially conscious problem solving is not being taught early on, and it’s really important to breed this line of thinking into our students,” said Gupta. “One of my academic goals is to create thoughtfulness in math. I would like my students to consider ethical implications of their algorithms before deploying them in practice.”

Gupta’s research plan is to continue creating and correcting algorithms that drive social good, promote diversity, and shift our societal thinking in a more responsible direction.