In the quest to ensure drones and other autonomous vehicles operate safely, engineers have to out-think the malicious attackers who might try to take over.

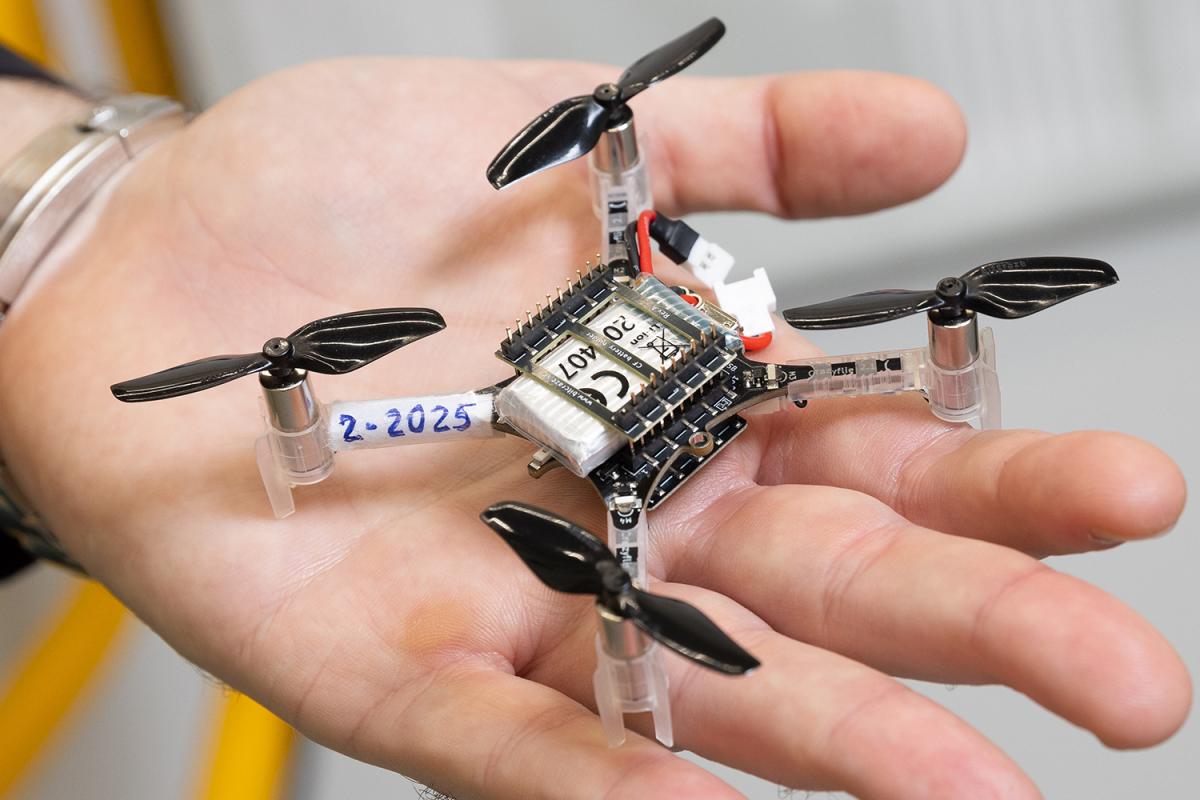

Kyriakos Vamvoudakis (left) and postdoctoral fellow José Magalhães test drones and controllers for performance and safety.

Control theory, cyber-physical systems, game theory, and reinforcement learning sound like complex engineering and mathematical concepts. And they are.

They’re also on the front lines of protecting airborne and underwater drones as well as autonomous passenger vehicles from hackers who might try to take them over and cause death and destruction.

Kyriakos G. Vamvoudakis is at the center of that work. He’s an expert in the design of control software for autonomous vehicles that protects against those who would co-opt the vehicles for nefarious purposes.

“We strive to create resilient controllers that can anticipate attackers, mitigate their impact, and decipher their intentions,” said Vamvoudakis, the Dutton-Ducoffe Endowed Professor in the Daniel Guggenheim School of Aerospace Engineering. “We apply game theoretic techniques to secure sensors and actuators in large-scale cyber-physical systems, such as an airplane or multiple airplanes, multiple drones, flying taxis, cars.

“They’ll have different challenges, but our work is more general, and then we try to validate it in different kinds of platforms.”

The sensors and actuators he mentioned are critical tools — and prime targets for malicious actors. The goal is to efficiently gather data from onboard instruments, process it, and then adjust control surfaces or rotors accordingly. This involves establishing acceptable data boundaries from sensing devices and defining the movements of actuators.

Vamvoudakis’ controllers are meticulously designed to ensure performance and safety: preventing drones from colliding with buildings or other structures, enabling safe operation around other air traffic, and ensuring safety for human pilots or, eventually, passengers. To achieve that, Vamvoudakis, his students, and collaborators must adopt the mindset of bad actors who would try to exploit these vehicles.

“We have to model them, yes,” he said. “We have to always take into consideration what is the worst thing they can do when we compute our defending control policies.”

This is where game theory is valuable for their work. The contest is often a zero-sum game, with a winner and a loser: either the attacker wins and takes over all or part of the drone or its controller, or the defender wins by fending off the attack. Designing a system that anticipates the absolute worst case means the control software can mitigate any attack up to that worst case, and the autonomous vehicle always wins against an attacker.

“Since you know the worst case, you can mitigate anything below this worst case, and you’ll be able to guarantee a form of robustness and resiliency,” Vamvoudakis said.
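The worst-case reasoning in that quote can be illustrated with a small matrix game. The sketch below is only an illustration, not the team's controller design: the policy names, attack names, and payoff numbers are all hypothetical. It picks the defender policy whose worst outcome is best (a "maximin" choice), so every attack yields at least that guaranteed payoff.

```python
# Hypothetical payoff table (illustrative numbers, not from the article).
# Rows are defender policies, columns are attacker actions, and each entry is
# the defender's payoff; in a zero-sum game the attacker gets its negative.
PAYOFF = {
    "trust_all_sensors": {"no_attack": 10, "spoof_gps": -8, "jam_rotor": -6},
    "cross_check_sensors": {"no_attack": 8, "spoof_gps": 3, "jam_rotor": -2},
    "isolate_and_land": {"no_attack": 2, "spoof_gps": 2, "jam_rotor": 1},
}


def worst_case(policy):
    """Payoff the defender gets if the attacker picks its most damaging action."""
    return min(PAYOFF[policy].values())


def maximin_policy():
    """Return the policy with the largest worst-case payoff, and that payoff."""
    best = max(PAYOFF, key=worst_case)
    return best, worst_case(best)


if __name__ == "__main__":
    policy, guaranteed = maximin_policy()
    print(f"Defend with {policy!r}; guaranteed payoff is at least {guaranteed}")
```

The conservative policy gives up performance when nobody attacks, which is the usual price of a worst-case guarantee.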

Game theory encompasses the study of numerous “agents” within a system, each with distinct objectives that may influence or conflict with one another. In scenarios involving multiple attackers and defenders, according to Vamvoudakis, this could manifest as a local zero-sum game between an individual vehicle and an attacker, while simultaneously constituting a broader non-zero-sum game.

The ultimate objective, then, is to identify what’s called the Nash equilibrium. Named after mathematician John Nash, the equilibrium represents the set of control policies where no agent can improve their outcome by unilaterally altering their strategy.
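For a small game with a finite set of choices, that definition can be checked directly. The following sketch assumes a hypothetical two-player game with made-up actions and payoffs; it tests every pair of actions and keeps those where neither player gains by switching alone. Real control problems involve continuous policies and are far harder to solve than this enumeration suggests.

```python
from itertools import product

# Hypothetical game (illustrative numbers only).
# PAYOFFS[(defender_action, attacker_action)] = (defender_payoff, attacker_payoff)
DEFENDER_ACTIONS = ["baseline_controller", "resilient_controller"]
ATTACKER_ACTIONS = ["attack", "hold_off"]
PAYOFFS = {
    ("baseline_controller", "attack"): (-3, 2),
    ("baseline_controller", "hold_off"): (1, 0),
    ("resilient_controller", "attack"): (0, -1),
    ("resilient_controller", "hold_off"): (1, 0),
}


def is_nash(defender_action, attacker_action):
    """True if neither player can do strictly better by deviating alone."""
    d_pay, a_pay = PAYOFFS[(defender_action, attacker_action)]
    defender_can_improve = any(
        PAYOFFS[(d, attacker_action)][0] > d_pay for d in DEFENDER_ACTIONS
    )
    attacker_can_improve = any(
        PAYOFFS[(defender_action, a)][1] > a_pay for a in ATTACKER_ACTIONS
    )
    return not (defender_can_improve or attacker_can_improve)


def pure_nash_equilibria():
    """List every action pair that satisfies the equilibrium condition."""
    return [
        pair
        for pair in product(DEFENDER_ACTIONS, ATTACKER_ACTIONS)
        if is_nash(*pair)
    ]


if __name__ == "__main__":
    print(pure_nash_equilibria())
```

Not every game has an equilibrium in single, fixed actions like this; in that case the function simply returns an empty list.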

It’s a big ask to compute such complex scenarios, and it’s not always strictly necessary. Vamvoudakis’ tools model adversaries based on how smart they are. It’s an approach that yields significant efficiency in computing the defenses the controller needs to deploy.

“Different attackers have different, let’s say, levels of intelligence,” he said. “One attacker might be just a random ‘player,’ so it doesn’t do a lot. Another attack might be very smart, with inside information about our systems, and then we have to mitigate those effects. So, I find out how smart the attacker is, and then I can compute the policy such that I always win this ‘game.’”

Vamvoudakis noted adversaries can be extremely stealthy. Imagine they’ve planted a piece of malicious code that “hides” in a wind gust. In other words, the attack is triggered by rough air or some turbulence in an effort to disrupt the system surreptitiously. These are the kinds of malevolent realities he and his team have to consider.

How might an attack and defense play out?

Say an adversary tries to override one of a drone’s rotors or fools one of its sensors to feed bad data into the controller. Vamvoudakis’ resilient controller would identify the attack and isolate that rotor or sensor, relying on the other redundant systems to safely operate.
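The article does not describe how the controller detects a compromised sensor, so the sketch below substitutes a simple, well-known stand-in: median voting across redundant sensors. The sensor names, readings, and tolerance are hypothetical. Any sensor that disagrees with the median by more than the tolerance is isolated, and the remaining readings are averaged.

```python
from statistics import median


def isolate_outliers(readings, tolerance):
    """Fuse redundant readings and report which sensors were set aside.

    readings:  dict mapping sensor name to its measurement
    tolerance: largest allowed disagreement with the median
    Returns (fused_value, sorted list of isolated sensor names).
    """
    center = median(readings.values())
    trusted = {
        name: value
        for name, value in readings.items()
        if abs(value - center) <= tolerance
    }
    if not trusted:
        # No sensor agrees with the median; fall back to the median itself.
        return center, sorted(readings)
    isolated = sorted(set(readings) - set(trusted))
    fused = sum(trusted.values()) / len(trusted)
    return fused, isolated


if __name__ == "__main__":
    # Hypothetical altitude readings in meters; the lidar value is spoofed.
    altitude = {"barometer": 120.4, "gps": 119.8, "lidar": 250.0}
    print(isolate_outliers(altitude, tolerance=5.0))
```

Median voting only works while most sensors are honest, which is one reason redundancy matters in the scenario described above.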

One of the group’s projects uses geofencing to protect restricted areas — perhaps airspace over a military facility or even the White House. With support from the National Science Foundation, they’re developing techniques to safely land a drone if it’s hijacked and enters a protected area.
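A geofence check can be sketched in a few lines. This is a deliberately simplified illustration, not the team's technique: it assumes a circular restricted zone in flat local coordinates, and the class name, function names, and command strings are all hypothetical. Inside the zone, whatever command was requested is replaced by a landing command.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RestrictedZone:
    """Circular no-fly zone in local planar coordinates (meters)."""
    center_x_m: float
    center_y_m: float
    radius_m: float


def inside(zone, x_m, y_m):
    """True if the point lies within or on the zone boundary."""
    distance = math.hypot(x_m - zone.center_x_m, y_m - zone.center_y_m)
    return distance <= zone.radius_m


def supervise(zone, x_m, y_m, requested_command):
    """Pass the command through, or override it with LAND inside the zone."""
    return "LAND" if inside(zone, x_m, y_m) else requested_command


if __name__ == "__main__":
    zone = RestrictedZone(center_x_m=0.0, center_y_m=0.0, radius_m=500.0)
    print(supervise(zone, 900.0, 0.0, "GOTO_WAYPOINT"))
    print(supervise(zone, 100.0, 50.0, "GOTO_WAYPOINT"))
```

The key design point is that the override sits outside the normal command path, so a hijacked navigation command cannot talk its way past it.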

The team also works on the security of autonomous and semiautonomous cars and electric vehicles. Vamvoudakis described a possible attack where malware inserted into an EV misreports the status of the battery with the intent of stranding the driver far from charging resources. His team is designing charging infrastructure that’s secure from attackers who might use it to insert such code.

With support from the Department of Defense, Vamvoudakis is developing intelligent agents to work in tandem with human security analysts to monitor drone data for anomalies that indicate an attack. His lab also has funding from NASA, several branches of the military, the National Science Foundation, national labs, NATO, and the Department of Energy.

The machine learning models his team develops are “closed-loop,” meaning they constantly incorporate feedback from operations to improve how they perform. There’s no pretraining of the models; they work in real time to assess what’s happening with the vehicle and how to react.
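To give a feel for learning without pretraining, here is a toy sketch of an online estimator. It is an assumption-laden stand-in, not the team's model: it supposes a hypothetical scalar system where the output is an unknown gain times the input, starts with no knowledge of the gain, and corrects its estimate after every new measurement using a normalized gradient step.

```python
def online_gain_estimate(samples, step_size=0.5):
    """Estimate k in y = k * u from streaming (u, y) pairs, starting from zero.

    Each new sample is used once, as it arrives, and then discarded.
    """
    k_hat = 0.0
    for u, y in samples:
        error = y - k_hat * u  # feedback: how wrong the current estimate was
        k_hat += step_size * error * u / (1.0 + u * u)  # normalized correction
    return k_hat


if __name__ == "__main__":
    TRUE_GAIN = 2.0  # hypothetical value, unknown to the estimator
    stream = [(u, TRUE_GAIN * u) for u in [1.0, 2.0, 3.0] * 20]
    print(round(online_gain_estimate(stream), 6))
```

Because the estimate is updated from live data, it can keep tracking the system if the true gain drifts during operation.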

Vamvoudakis and his team constantly publish and share their work with the scientific community, including in seven books so far. Because those methods are public, they always assume adversaries have more knowledge about the system they’re attacking than the researchers do, and they take a working-from-behind approach in building their models.

“I find it fascinating to try to defend systems against adversaries and try to be one step ahead of them,” Vamvoudakis said. “I will always have a job to do.”

Helluva Engineer

This story originally appeared in the Spring 2025 issue of Helluva Engineer magazine.

Not where we are, but where we’re going — whether it’s here on the ground or millions of miles away. Georgia Tech engineers are shaping the rapidly shifting future of how we’ll fly people and stuff. Our engineers are helping get humans to the moon and, eventually, Mars, while creating the tools to help us unravel some of our solar system’s deepest mysteries. The sky is no limit in the Georgia Tech College of Engineering. Take off with us into The Aerospace Issue.