Georgia Tech researchers are using AI to quickly train exoskeleton devices, making it much more practical to develop, improve, and ultimately deploy wearable robots for people with impaired mobility.

With a new AI tool, Georgia Tech researchers can create fully functional exoskeleton controllers without spending many hours in specialized labs collecting data from humans wearing the devices. The advance means it's far more practical to design and deploy useful exoskeletons and robotic limbs.

To make useful wearable robotic devices that can help stroke patients or people with amputated limbs, the computer brains driving the systems must be trained. That takes time and money — lots of time and money. And researchers need specially equipped labs to collect mountains of human data for training.

Even when engineers have a working device and brain, called a controller, changes and improvements to the exoskeleton system typically mean data collection and training start all over again. The process is expensive and makes bringing fully functional exoskeletons or robotic limbs into the real world largely impractical.

Not anymore, thanks to Georgia Tech engineers and computer scientists.

They’ve created an artificial intelligence tool that can turn huge amounts of existing data on how people move into functional exoskeleton controllers. No data collection, retraining, or hours upon hours of additional lab time are required for each specific device.

Their approach has produced an exoskeleton brain that offers meaningful assistance across a huge range of hip and knee movements and works as well as the best controllers currently available. Their work was published Nov. 19 in Science Robotics.

“It's a massive efficiency improvement in terms of the research mission. But what I think is especially exciting is in the real world,” said Aaron Young, associate professor in Georgia Tech’s George W. Woodruff School of Mechanical Engineering. “Let’s say a startup company wants to deploy an exoskeleton device, and that device goes through four iterations during development. This is now easy to accommodate without massive data collections needed every time they make a change to the device. That’s basically what would have been required if we were stuck with where the technology was a year ago.”

The study was led by former Ph.D. student Keaton Scherpereel, who studied with Young and Omer Inan in the School of Electrical and Computer Engineering. All the researchers are affiliated with Georgia Tech’s Institute for Robotics and Intelligent Machines.

They also worked with Matthew Gombolay in the School of Interactive Computing, whose team helped develop the AI system that made the advance possible.

The researchers used a kind of AI called a CycleGAN that was originally developed to map satellite images of a specific spot to ground-level images of the same place. It’s the same kind of AI that can transform an image of horses into one of zebras.

Instead of connecting images, the AI connected large datasets of people moving around without exoskeletons to how they would move while wearing a device. Then it used that data to anticipate how much robotic assistance to offer at the hip and knee.
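For readers curious about the core idea behind a CycleGAN, here is a minimal sketch of a cycle-consistency loss: translate a sample into the other domain and back, then measure how far it landed from where it started. This is an illustration only, not the researchers' implementation. The toy scalar functions `g_good`, `f_good`, and `f_bad` are hypothetical stand-ins for the neural networks that would translate between "moving without an exoskeleton" data and "wearing an exoskeleton" sensor readings, and a real CycleGAN also trains with adversarial losses that are not shown.

```python
def cycle_consistency_loss(xs, ys, g, f):
    """Mean absolute round-trip error for mappings g: X -> Y and f: Y -> X."""
    # X -> Y -> X should land back on the original X sample.
    forward = sum(abs(f(g(x)) - x) for x in xs) / len(xs)
    # Y -> X -> Y should land back on the original Y sample.
    backward = sum(abs(g(f(y)) - y) for y in ys) / len(ys)
    return forward + backward


# Hypothetical toy translators (assumptions for illustration only).
def g_good(x):
    return 2.0 * x + 1.0      # domain X -> domain Y


def f_good(y):
    return (y - 1.0) / 2.0    # exact inverse of g_good


def f_bad(y):
    return y / 2.0            # forgets the offset, so round trips drift


xs = [0.0, 1.0, 2.0]          # made-up samples from domain X
ys = [1.0, 3.0, 5.0]          # made-up samples from domain Y

perfect = cycle_consistency_loss(xs, ys, g_good, f_good)
imperfect = cycle_consistency_loss(xs, ys, g_good, f_bad)
print(perfect, imperfect)
```

A pair of translators that undo each other gives a loss of zero, while a mismatched pair is penalized. Training pushes the two networks toward the first case, which is what lets the approach learn from datasets that were never collected side by side.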

“We had this idea of a stepping-stone domain. You can take the unlabeled biomechanics data — people moving around — bring them into a simulation and artificially add sensors to them as if they were wearing an exoskeleton. Then you can imagine what those sensor readings would look like,” said Gombolay, associate professor in the School of Interactive Computing. “The ultimate goal is to use those sensor readings to predict how much biological torque they are generating at their hips or knees or whatever joint you're looking at.

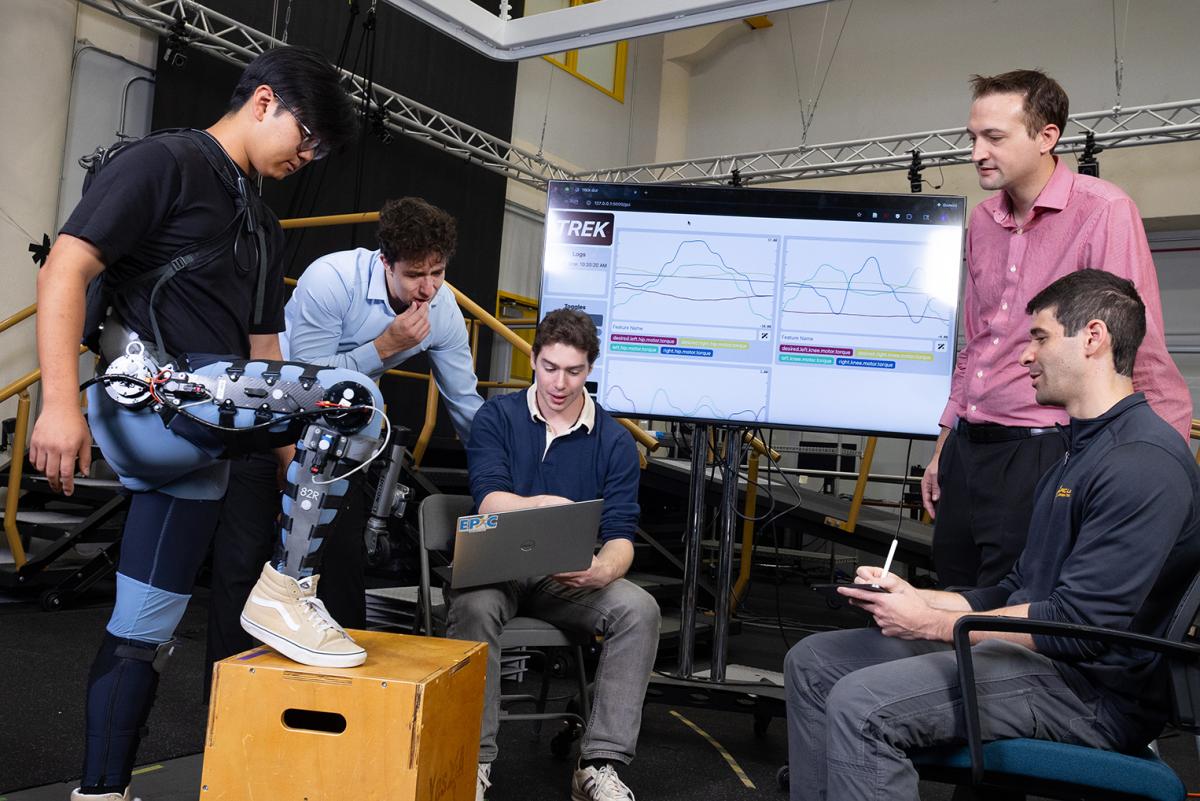

The researchers used this lower-limb exoskeleton to test their new approach, which uses huge amounts of existing data on how people move to create functional exoskeleton controllers that provide meaningful assistance. With their AI tool, the controllers don't require hours and hours of additional training and data collection with each specific exoskeleton device.

“We've taken cheap, large-scale data and learned how to map that to a domain where it's expensive to collect it for a specific system. And this could be repurposed for any exoskeleton.”

Young likened it to a translator, taking information in one “language” and making it possible for any specific robot to understand the information and use it.

The new technique builds on his team’s previous work developing highly capable AI exoskeleton controllers. But those efforts required years of collecting data on people moving with the exoskeleton before the controller could provide useful assistance. This new class of AI-powered model makes it possible to skip all that.

Like the earlier “task-agnostic” controllers, the AI model isn’t predicting what the user is trying to do — climb stairs or step off a curb, for instance. Instead, it’s instantaneously detecting and estimating how the user’s joints are moving and how much effort they’re exerting. Then the exoskeleton boosts those efforts by as much as 20%.
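The assistance step described above can be sketched in a few lines: take the controller's estimate of the torque the user is generating at a joint and command a fraction of it from the motor. This is a simplified illustration under stated assumptions, not the team's controller. The function name, the 20 N·m actuator limit, and the sample torque values are hypothetical; only the 20% figure comes from the article.

```python
def assistance_torque(estimated_joint_torque_nm, gain=0.20, max_torque_nm=20.0):
    """Return a motor torque command proportional to the estimated joint torque.

    gain: fraction of the user's estimated effort to add (0.20 = 20%).
    max_torque_nm: hypothetical actuator limit, assumed for this sketch.
    """
    if gain < 0.0:
        raise ValueError("gain must be non-negative")
    command = gain * estimated_joint_torque_nm
    # Clamp the command so it never exceeds the assumed actuator limit.
    return max(-max_torque_nm, min(max_torque_nm, command))


# Made-up torque estimates in newton-meters; the sign encodes direction.
stairs_hip = assistance_torque(50.0)     # 20% of 50 N·m
heavy_load = assistance_torque(-150.0)   # 20% would be -30 N·m, so it is clamped
print(stairs_hip, heavy_load)
```

Because the command depends only on the estimated joint effort, the same rule applies whether the user is climbing stairs or stepping off a curb, which is the sense in which such a controller is task-agnostic.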

“One of the things I'm most excited about is seeing how this accelerates not just our lab’s research but also opens the door for our types of controllers to be deployed by roboticists who don't have access to the equipment that we do,” said Scherpereel, now a senior controls engineer at Skip. “This has the potential to increase the speed and the number of researchers who can work on this. And with that combination, who knows what cool things can be built on this foundation.”

The Science Robotics study proved the AI translation worked with a leg exoskeleton that provided power at the hip and knee joints. But the implications reach much further.

“Broadly speaking, we'll be able to take the advances in this paper and apply them to upper limb systems, prosthesis systems, and potentially even autonomous robots,” Young said. “That is the big advance. And this is where Matthew's team has really helped us by creating the AI that does this translation. Now we have the ability to collaborate with industry partners and get these controllers deployed in real systems that people use, hopefully in the near future.”

About the Research

This research was supported by the National Science Foundation, grant Nos. 2233164, 2328051, and DGE-2039655. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of any funding agency.

Citation: Keaton L. Scherpereel et al. Deep domain adaptation eliminates costly data required for task-agnostic wearable robotic control. Sci. Robot. 10, eads8652 (2025). DOI: 10.1126/scirobotics.ads8652

Related Content

No Matter the Task, This New Exoskeleton AI Controller Can Handle It

Researchers created a deep learning-driven controller that helps users in real-world tasks, even those it wasn’t trained for.

Universal Controller Could Push Robotic Prostheses, Exoskeletons Into Real-World Use

Aaron Young’s team has developed a wear-and-go approach that requires no calibration or training.

To Help Recover Balance, Robotic Exoskeletons Have to be Faster Than Human Reflexes

Researchers at Georgia Tech and Emory found wearable ankle exoskeletons helped subjects improve standing balance only if they activated before muscles fired.
